One of the most valuable use cases for immersive visualisation is collaborative design review: inspecting components, looking for issues, and providing feedback. Some of these things can be hard to do while wearing a headset, though. How do you add a note to a part to identify an issue while staying immersed? An on-screen keyboard that you point at with the laser pointer would work, but it is slow and awkward to use.

How about just speaking naturally?

Azure Speech Recognizer

Microsoft’s Azure platform has some very good speech-to-text services, so I wanted to expose that functionality to Visionary Render (VisRen). I can do this by combining the native VisRen API with the C# APIs that Microsoft provides for interacting with the Azure speech recogniser.

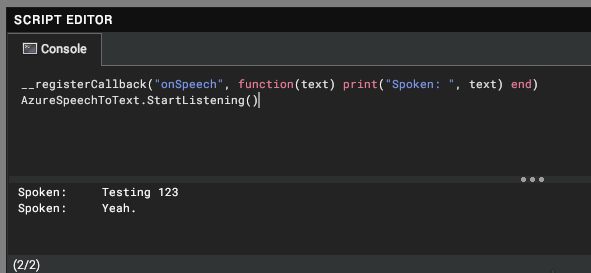

The result is a generic plugin that can start recognising speech and turn it into text. It exposes that functionality to the built-in Lua scripting environment through a new callback that it defines, “onSpeech”.

With only these two lines of script in VisRen, I immediately start to get useful functionality. Once the plugin starts listening, it repeatedly calls my function with the text that was spoken, split wherever the recogniser detects a natural break in my speech.

Doing something with the text

Using this basic functionality, I can use the Lua API provided by VisRen to affect the behaviour of the application based on what is spoken. The great thing about the Lua API is that it can also be used to create plugins, meaning I can wrap up some useful functionality and make use of it in any scene.

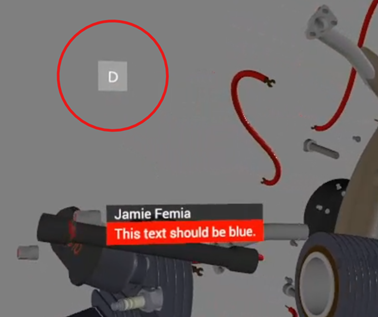

My plugin integrates this with the annotation system – specifically by adding a small floating button on screen for whichever annotation is active. Pressing the button starts listening, and then anything I say is added as a comment on the annotation. Pressing the button again stops listening.
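The toggle behaviour described above can be sketched in plain Lua. This is only an illustration of the logic, not the plugin's actual code: the `Dictation` table, its methods, and the `annotation` table with a `comments` list are all hypothetical stand-ins, and nothing here calls the real VisRen annotation API or the Azure recogniser.

```lua
-- Hypothetical stand-ins: none of these names come from the VisRen API.
local Dictation = {}
Dictation.__index = Dictation

-- Create a dictation controller bound to one annotation (a plain table here).
function Dictation.new(annotation)
  return setmetatable({ annotation = annotation, listening = false }, Dictation)
end

-- Called when the floating button is pressed: flips the listening state
-- and returns the new state.
function Dictation:toggle()
  self.listening = not self.listening
  return self.listening
end

-- Called for each recognised phrase. In a real plugin this would be driven
-- by the onSpeech callback; here it is called by hand.
function Dictation:onSpeech(text)
  if not self.listening then return false end
  -- Trim surrounding whitespace and ignore empty results.
  local trimmed = text:match("^%s*(.-)%s*$")
  if trimmed == "" then return false end
  table.insert(self.annotation.comments, trimmed)
  return true
end

-- Usage: only speech received between the two button presses is kept.
local annotation = { name = "Bracket clearance", comments = {} }
local dictation = Dictation.new(annotation)
dictation:onSpeech("ignored, not listening yet")
dictation:toggle()
dictation:onSpeech("  This bolt clashes with the housing. ")
dictation:toggle()
dictation:onSpeech("ignored again")
print(#annotation.comments, annotation.comments[1])
```

Keeping the listening flag next to the annotation it writes to means each phrase has one obvious destination, and anything spoken while the button is off is simply dropped.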

Since this integrates with the existing annotation feature, once the comments have been added you can use them just like manually entered ones, including exporting them through the APIs (there’s an example of this on our GitHub).

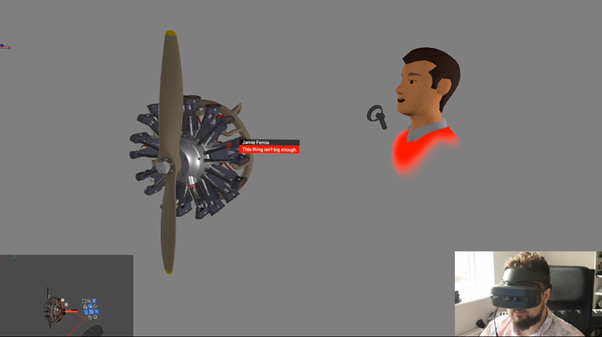

This is very useful if you are using an immersive device like a head-mounted display. Whether you are standing up or sitting down, it is inconvenient to be reaching for a keyboard to type notes while wearing a headset; you probably need to lift it off your eyes so you can see, and this clearly breaks the flow of your work.

Using your voice is a great way to work when both of your hands are holding VR controllers.

Other uses

Annotations are not the only thing that could be controlled by your voice. You might want to get rid of the dictation and annotation placement controls and instead use key command words like “annotate this” to add an annotation to a selected part and start listening.

Many other aspects of the application are also exposed to the APIs, so these could be controlled by voice, too. For example:

- Application commands like load, save, new, exit

- Enabling and disabling tools like measurement, or manipulators

- Creating basic geometry for space-claim tasks
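One way to approach voice commands like these is a lookup table that maps normalised phrases to handler functions. The sketch below is plain Lua under stated assumptions: the phrases, the `commands` table, and the `handleSpeech` function are hypothetical, and the handlers only record what they would do rather than calling any real VisRen API.

```lua
-- Hypothetical command table: phrases and handlers are illustrative only.
-- Each handler just records an action name instead of driving the application.
local log = {}
local commands = {
  ["annotate this"]       = function() table.insert(log, "annotate") end,
  ["save"]                = function() table.insert(log, "save") end,
  ["enable measurement"]  = function() table.insert(log, "measure:on") end,
  ["disable measurement"] = function() table.insert(log, "measure:off") end,
}

-- Normalise recognised text: lower-case, strip punctuation,
-- collapse runs of whitespace, and trim both ends.
local function normalise(text)
  local s = text:lower():gsub("%p", "")
  s = s:gsub("%s+", " ")
  return (s:match("^%s*(.-)%s*$"))
end

-- Intended to be called with each phrase from the speech callback.
-- Returns true if the phrase matched a command, false otherwise.
local function handleSpeech(text)
  local handler = commands[normalise(text)]
  if handler then
    handler()
    return true
  end
  return false
end

-- Usage
handleSpeech("Annotate this.")
handleSpeech("Enable  measurement")
handleSpeech("what a nice day")   -- no match, ignored
print(table.concat(log, ", "))    --> annotate, measure:on
```

Exact matching after normalisation keeps ordinary conversation from triggering commands by accident. Phrases that match nothing return false, so they could still be passed on to dictation.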

Demo

I recorded a short video showing this in action.